Biorobotics – the augmentation of the human body with robotics – has been a recurring theme in science fiction for decades, inspiring the popular Iron Man series among many others. Lately, though, it has moved from an interesting idea to real-world science with staggering potential.

Robotics technologies have advanced rapidly in recent years, and turning science fiction into science fact is no longer limited by robotic capabilities but by our understanding of the human interface. The human body still operates in far more complicated ways than any robot.

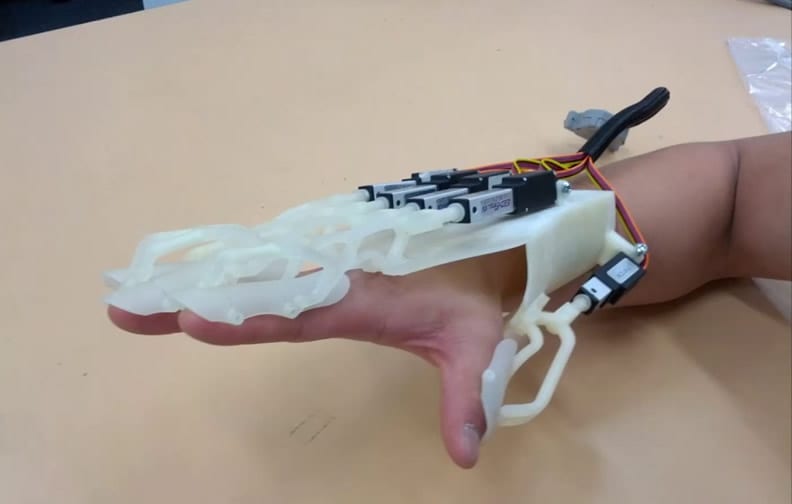

Replicating, supporting or augmenting the movement of a hand, for instance, while still allowing the full range of human movement and sensation, is not easy. Dr Lei Cui, Head of the Biorobotics Research Lab in Curtin’s Department of Mechanical Engineering, is at the centre of a research team developing a full hand orthosis. The work started with a customised, 3D-printed plastic ‘superskeleton’ attached to a single finger, which could allow, support or control the finger’s full range of movement (across all three knuckles) using a single small linear motor.

Cui’s team developed the finger orthosis with Professor Garry Allison, Professor of Neurosciences and Trauma Physiotherapy in Curtin’s Faculty of Health Sciences, as a tool to increase dexterity and mobility for people with impaired or reduced manual function, such as stroke patients and people with spinal cord injuries or neurodegenerative diseases. It subsequently found a potential application in post-surgical rehabilitation after finger tendon repair: supporting, controlling and gradually increasing the available range of movement as the finger heals.

Cui’s team has since extended the single-finger orthosis to a full hand orthosis, capable of independently supporting or controlling the movement of all four fingers. “We only started developing the mechanism for the thumb one year ago,” says Cui. “The thumb has a very complex kinematic structure, with many more degrees of freedom, or possible directions of movement, than the other fingers.” The thumb’s independent operation, and the strength of its interaction with the other fingers, are pivotal to our manual dexterity.

The intention is to use the full hand orthosis to help people with weak fingers, particularly those with neurodegenerative conditions, continue to manage their daily activities. The next stage of design focuses on training the orthosis to reproduce specific movements independently, such as grasping a water bottle or turning a doorknob. The team is currently analysing normal hand biomechanics during various activities, using surface electromyography to measure the electrical impulses generated by the muscles as they contract. Mapping the body’s own biomechanical and electrical signals onto the robotic hardware to control movement remains the biggest research challenge.

Applying the same customised, 3D-printed exoskeleton approach to the lower limb, Cui’s team has also developed a leg orthosis. It focuses on controlling the hip and knee joints (ankles, like thumbs, are complicated) and on replicating normal walking movement, again for the rehabilitation of people with neurodegenerative conditions or spinal injuries, or after a stroke.

In parallel with Cui’s work on supporting walking movement, Allison’s team has been studying how we balance. “It is a fairly fundamental skill in controlling all types of movement,” explains Allison, “from young people learning new skills to elderly people avoiding falls”.

Three separate inputs are used to maintain balance: visual feedback from your eyes as you move; feedback from your vestibular system, including the semicircular canals of the inner ear; and feedback from your muscles and tendons as you interact with a surface.

Allison originally asked Cui’s team to build a balance platform that could be controlled to move up and down, rotate and tilt in all directions, to study ankle stiffness in athletes and for lower limb balance rehabilitation. Their collaboration has since explored further possibilities at the nexus of biomechanics and robotics. By incorporating a force plate into the balance platform (to measure the force exerted by the person standing or sitting on it), the platform can be programmed to react to the user’s movement: it can effectively be ‘driven’ by the user, much like a surfboard. Lean into one side and the platform will tilt.

While it is similar to some commercially available products (such as the Wii Balance Board and some sophisticated immersive arcade games), Allison’s balance board can move through all three degrees of freedom, is “robust enough for 110 kg footballers to jump up and down on it”, and has no discernible lag in its reaction time. It can also be programmed for a variety of response characteristics: it can react as if you’re balancing on a surfboard on water, on treacle, on a very stiff spring, or on a sponge, for example.
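The article doesn’t say how the platform’s response is programmed. One standard way to make a force-sensing platform ‘feel’ like water, treacle or a spring is admittance control: the force plate’s reading is fed into a virtual mass–spring–damper, and the motion that model predicts becomes the platform’s tilt command. The Python sketch below is a minimal, single-axis illustration under that assumption. `VirtualSurface`, `PRESETS`, `user_torque`, `step` and `simulate` are hypothetical names, and every parameter value is invented for illustration rather than taken from the Curtin platform.

```python
from dataclasses import dataclass


@dataclass
class VirtualSurface:
    """Hypothetical virtual dynamics for ONE tilt axis:
    inertia * theta'' + damping * theta' + stiffness * theta = torque."""
    inertia: float    # kg·m², virtual rotational inertia
    damping: float    # N·m·s/rad, resistance to tilting quickly
    stiffness: float  # N·m/rad, pull back towards level


# Illustrative presets only: these numbers are made up, not the lab's values.
PRESETS = {
    "water": VirtualSurface(inertia=5.0, damping=2.0, stiffness=50.0),
    "treacle": VirtualSurface(inertia=5.0, damping=400.0, stiffness=50.0),
    "stiff_spring": VirtualSurface(inertia=5.0, damping=40.0, stiffness=5000.0),
    "sponge": VirtualSurface(inertia=5.0, damping=60.0, stiffness=200.0),
}


def user_torque(fz: float, cop_x: float) -> float:
    """Torque about the tilt axis from the vertical load fz (N) acting at a
    centre-of-pressure offset cop_x (m), as a force plate would report it."""
    return fz * cop_x


def step(surface: VirtualSurface, theta: float, omega: float,
         torque: float, dt: float) -> tuple[float, float]:
    """Advance the virtual surface by one control tick (semi-implicit Euler).
    The returned theta would be sent to the platform as its tilt setpoint."""
    alpha = (torque - surface.damping * omega - surface.stiffness * theta) / surface.inertia
    omega += alpha * dt
    theta += omega * dt
    return theta, omega


def simulate(name: str, fz: float = 800.0, cop_x: float = 0.02,
             dt: float = 0.001, seconds: float = 2.0) -> float:
    """Tilt angle (rad) after a user leans steadily to one side for `seconds`."""
    surface = PRESETS[name]
    theta, omega = 0.0, 0.0
    for _ in range(int(seconds / dt)):
        theta, omega = step(surface, theta, omega, user_torque(fz, cop_x), dt)
    return theta


if __name__ == "__main__":
    for preset in PRESETS:
        print(f"{preset:>12}: {simulate(preset):.4f} rad")
```

With these made-up values and a steady 16 N·m lean, `simulate("stiff_spring")` settles almost immediately near 0.0032 rad, while `simulate("treacle")` is still creeping towards its 0.32 rad equilibrium after two seconds, which is the qualitative difference between a stiff spring and treacle. A real platform would presumably run one such model per axis and add safety limits on angle and velocity.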

Again, it’s at the human interface that things get interesting. People on the balance board react to feedback from their muscles and tendons, their eyes and their inner ears. Allison and Cui intend to decouple those stimuli to better understand what affects balance. They are now incorporating virtual reality headsets to independently control what wearers perceive as ground level, and as up and down.

Early studies have suggested that immersing the mind in a simple virtual world (green grass, blue sky, flat horizon) can help improve balance rehabilitation and movement, possibly by reducing the visual stimuli to the bare essentials for retraining. But the possibilities go much further.

As Allison details, “if we can control the visual line of horizon, and independently move the platform as well, we can start separating out some of the neural processing and cognitive mechanisms associated with maintaining good balance. Being able to decouple the inputs and seeing how people react to them means we can look for more specific diagnostic and training opportunities.”

For example, Allison’s colleague Dr Sue Morris is leading a team that has recently shown that the role of peripheral vision in maintaining balance varies between people who are on the autism spectrum and those who are not. Perhaps this is not surprising, as many of the experiences common to people on the autism spectrum relate to their processing of visual cues. Using the balance platform to decouple the physical and visual feedback may in this case provide more insight into any differences in neural processing associated with autism, for both diagnosis and therapy.

Equally, conflict between visual and physical cues is thought to be the most common cause of motion sickness, so being able to decouple and control both inputs may lead to insights and therapies for those of us who can’t read a book on the bus or contemplate a boat trip.

From better understanding why we fall over or suffer from motion sickness, to regaining function in limbs affected by disease or injury, research at the interface between humans and robotics has the potential to create ‘super’ humans – those capable of all of the amazing feats that the healthy human body can perform, and that so many of us take for granted.